DateSync

A Multimodal System for Emotion-Aware Dating Insights

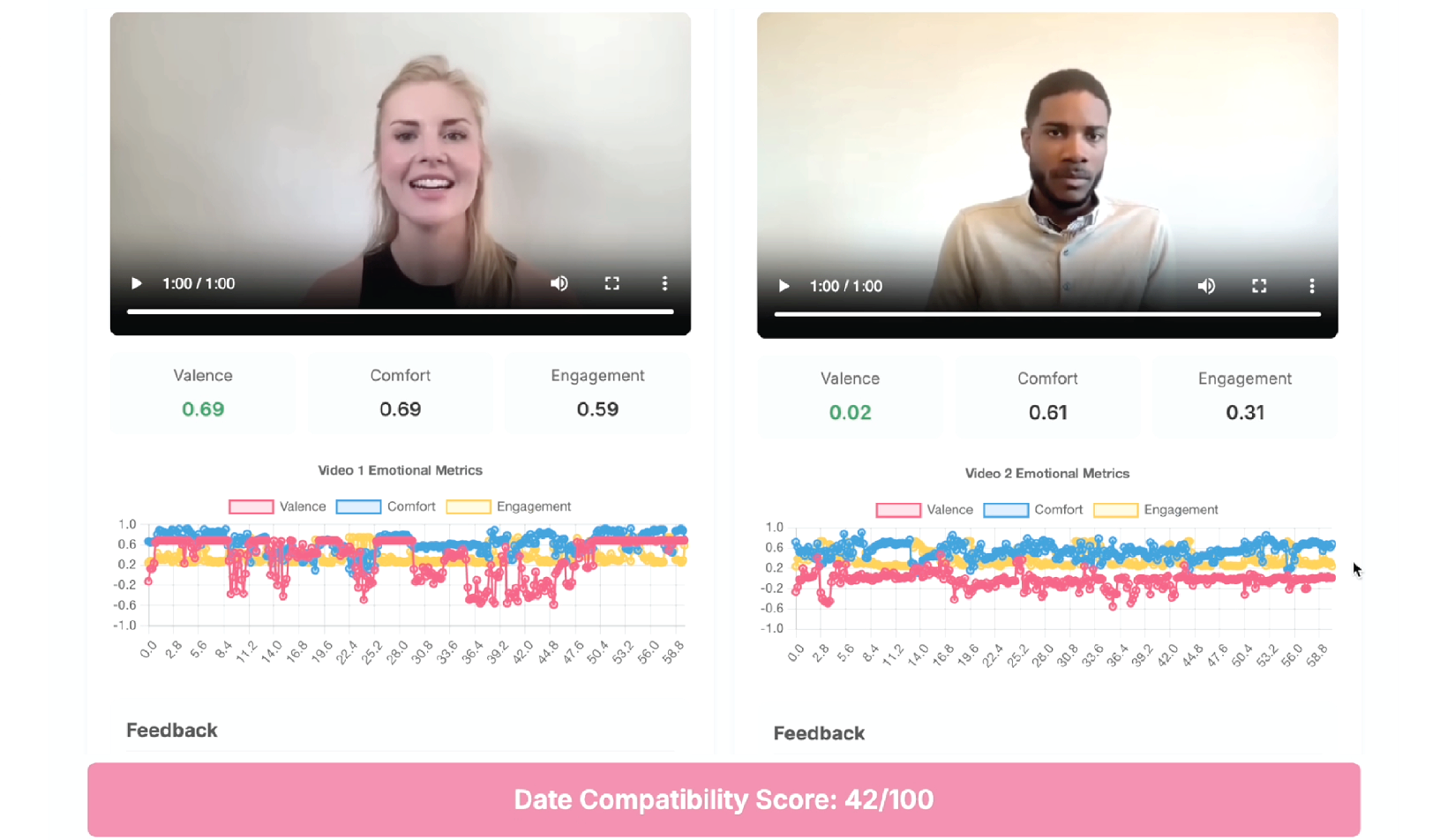

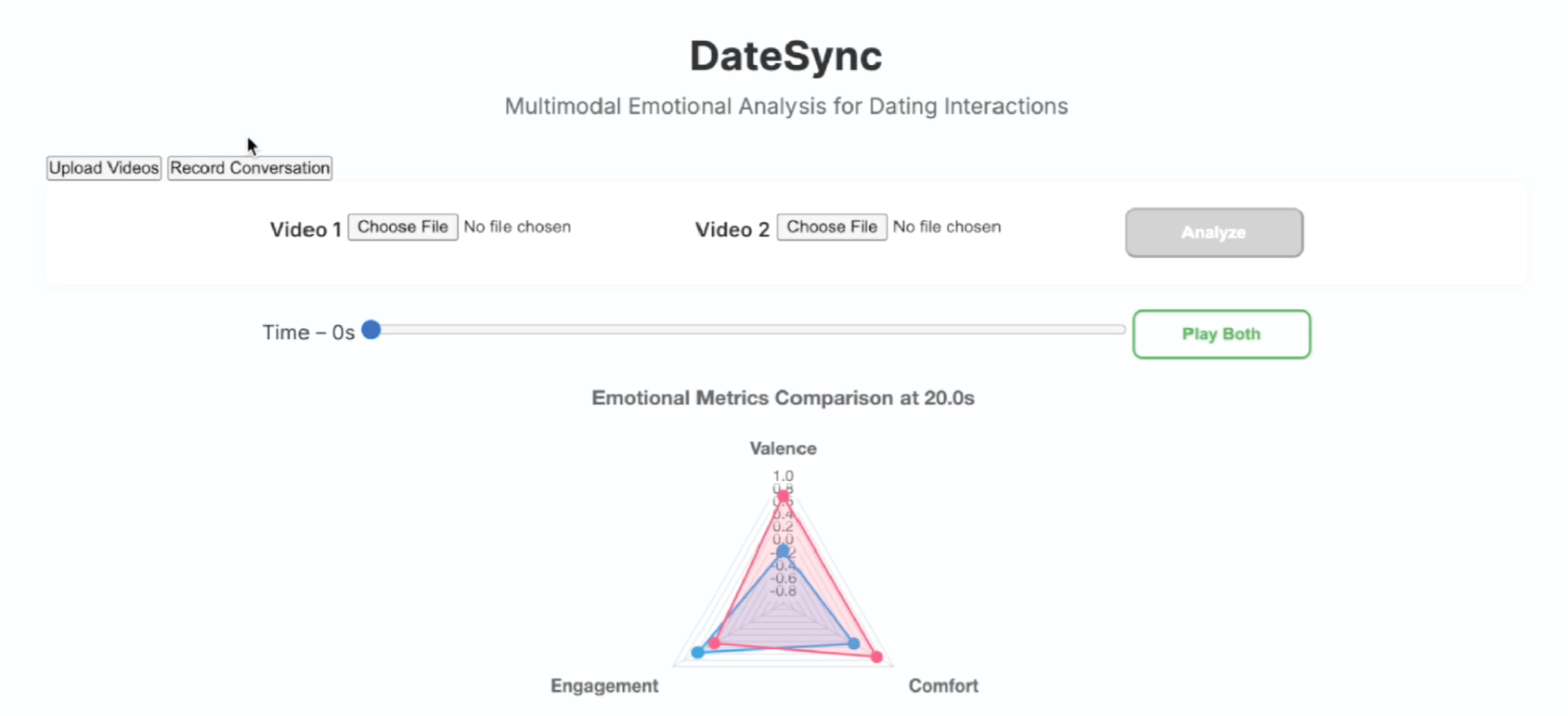

DateSync is a multimodal emotional analysis system designed to help users better understand how emotions are expressed, perceived, and interpreted during dating interactions. The system synthesizes visual, audio, and textual emotional cues to generate a compatibility score, offering insights into the emotional alignment between two participants. By analyzing facial expressions, vocal tone, and speech content, DateSync provides actionable feedback to improve communication and enhance dating experiences. The system achieves good accuracy in detecting three key emotional indicators: emotional valence, comfort level, and engagement level, as validated through user testing with dating pairs.

This project demonstrates how multimodal analysis can create more meaningful insights into interpersonal dynamics than single-modality approaches. Despite promising results, challenges related to cross-cultural emotion recognition, real-time latency, and user privacy remain. Future improvements aim to address these areas through model fine-tuning, lightweight client-side models, and privacy-preserving architectures.

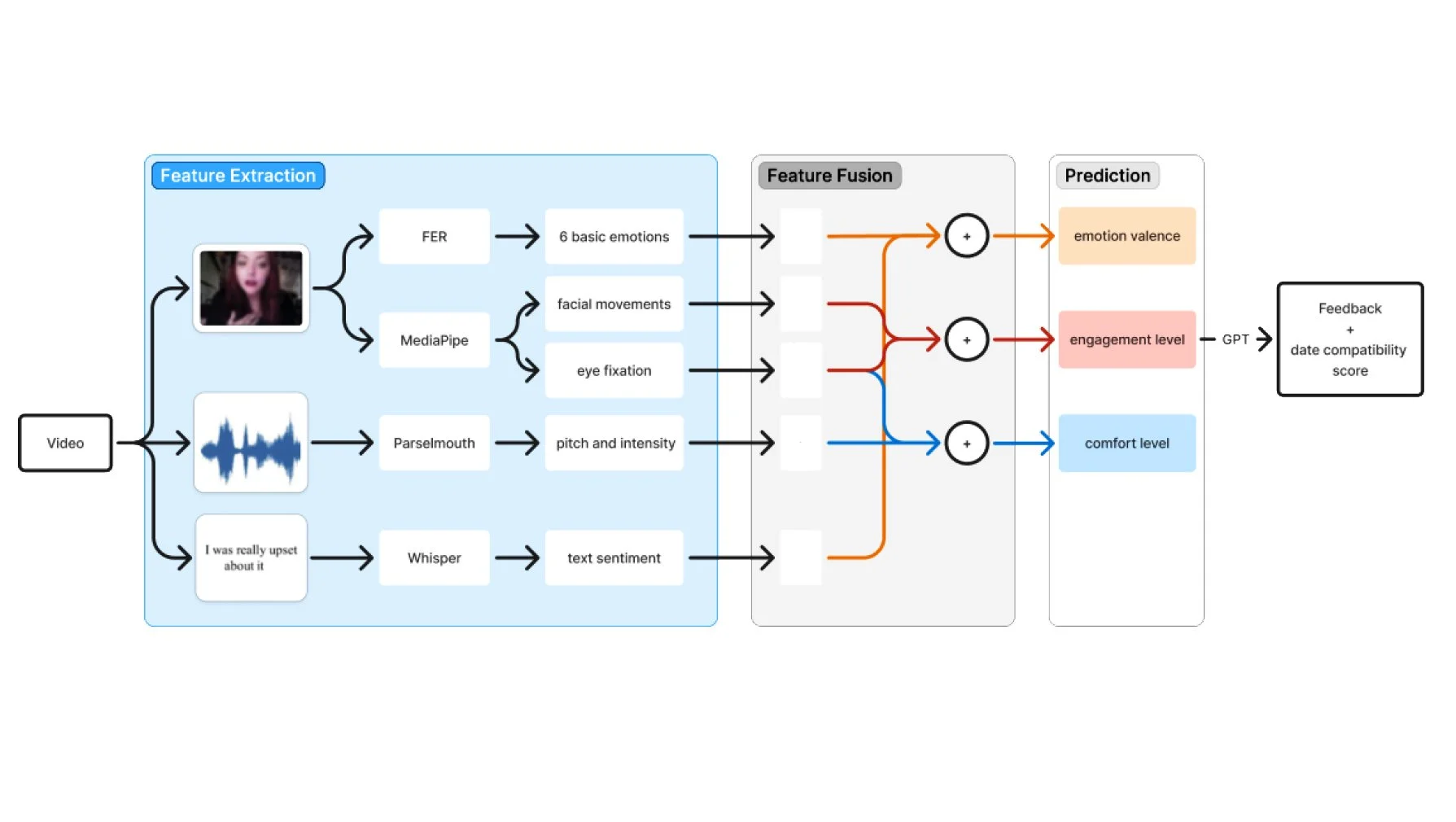

DateSync's architecture consists of several interconnected components:

1. Video Input Processing: Video and audio extraction, frame extraction at 5 FPS, and audio-to-text transcription

2. Modality-Specific Feature Extraction:

   - Visual Pipeline: FER (Facial Emotion Recognition) for basic emotions, and MediaPipe for facial movements and eye tracking

   - Audio Pipeline: Parselmouth for extracting pitch and intensity features

   - Text Pipeline: Whisper for transcription and GPT for sentiment analysis

3. Feature Alignment: Synchronization of features across modalities

4. Feature Fusion: Weighted combination of features from different modalities

5. Prediction Layer: Generation of core emotional metrics

6. Feedback Generation: AI-powered analysis and suggestions

7. Compatibility Calculation: Compatibility score for two-person videos
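
The frame sampling, alignment, fusion, and compatibility steps above can be illustrated with a minimal sketch in plain Python. This is not DateSync's actual implementation. The `Sample` type, the one-second alignment window, the modality weights, and the compatibility formula (100 minus the scaled mean absolute difference between two participants' fused scores) are all assumptions chosen for illustration. Only the 5 FPS sampling rate and the idea of a weighted combination come from the description above. Per-modality scores are assumed to be already scaled to [0, 1].

```python
import math
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Sample:
    t: float      # timestamp in seconds
    value: float  # per-modality score, assumed already scaled to [0, 1]


def frame_indices(source_fps: float, n_frames: int, target_fps: float = 5.0) -> list[int]:
    """Indices of the frames to keep when downsampling a video to target_fps."""
    step = max(source_fps / target_fps, 1.0)  # never sample more densely than the source
    indices, k = [], 0
    while int(k * step) < n_frames:
        indices.append(int(k * step))
        k += 1
    return indices


def align(samples: list[Sample], window: float, duration: float) -> list[Optional[float]]:
    """Average one modality's samples into fixed windows; None marks an empty window."""
    n = math.ceil(duration / window)
    buckets: list[list[float]] = [[] for _ in range(n)]
    for s in samples:
        i = int(s.t // window)
        if 0 <= i < n:
            buckets[i].append(s.value)
    return [mean(b) if b else None for b in buckets]


def fuse(aligned: dict[str, list[Optional[float]]],
         weights: dict[str, float]) -> list[Optional[float]]:
    """Weighted combination per window, renormalized over the modalities present."""
    n = len(next(iter(aligned.values())))
    fused: list[Optional[float]] = []
    for i in range(n):
        present = {m: v[i] for m, v in aligned.items() if v[i] is not None}
        total = sum(weights[m] for m in present)
        if total > 0:
            fused.append(sum(weights[m] * x for m, x in present.items()) / total)
        else:
            fused.append(None)
    return fused


def compatibility(a: list[Optional[float]], b: list[Optional[float]]) -> Optional[float]:
    """Hypothetical score in [0, 100]: higher when the two fused series stay close."""
    diffs = [abs(x - y) for x, y in zip(a, b) if x is not None and y is not None]
    return 100.0 * (1.0 - mean(diffs)) if diffs else None


if __name__ == "__main__":
    # Hypothetical weights; the source does not state the values DateSync uses.
    weights = {"visual": 0.5, "audio": 0.3, "text": 0.2}
    person_a = {
        "visual": align([Sample(0.0, 0.8), Sample(0.2, 0.6), Sample(1.1, 0.4)], 1.0, 2.0),
        "audio": align([Sample(0.5, 0.5), Sample(1.5, 0.5)], 1.0, 2.0),
        "text": align([Sample(0.9, 1.0)], 1.0, 2.0),
    }
    print(frame_indices(source_fps=30, n_frames=30))
    print(fuse(person_a, weights))
```

Renormalizing the weights within each window is one way to handle modalities that produce no signal for a stretch of time, such as silence in the text pipeline. A real system might instead interpolate or carry the last value forward.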